These days, online surveys have become the norm in the market research community, as they allow a large amount of data to be collected within a short period. The biggest challenge now facing the research community is: 'How do we maintain a high response rate?'

Online research has certainly proven its worth to many clients through the quality of research and the ability of brands to conduct fast and flexible surveys that don't break the bank. But at Decision Lab our ethos is to test more and test faster in order to employ the best and most agile methods for marketers.

Now our question is: 'How can we improve our online surveys?'

At Decision Lab, our dedicated and innovative team works tirelessly to create agile, connected and decision-focused solutions, and constantly challenges itself to optimise our online research.

In this post, we explain why Response Rate is the best quality indicator of an online survey, and outline the best practices to increase it.

Why should we care about Response Rate?

We live in an era of enabling. Creating and hosting a survey has become easier than ever before thanks to new technology platforms and tools. Yet access to tools doesn’t necessarily translate to good results. A beautifully designed survey is not enough to get good data; you also need a good response rate.

A good response rate provides four major benefits:

- Higher data quality and accuracy: The easier it is to respond to a survey, the greater the chance respondents will answer truthfully. Badly designed surveys will see people dropping out or clicking through senselessly.

- More representative sample: Having fewer people drop out mid-survey means your results will deviate less from your targeting goals.

- Reduced need for incentives: If your survey is short, relevant and engaging, doing the survey will, in itself, feel like a rewarding experience. That means you will receive good survey data without having to offer the kinds of incentives that can induce cheating.

- Community engagement: The last survey experience leaves a strong lasting impression on a respondent, so it’s important to ensure respondents aren’t frightened away by a long and boring survey. This is the first step to a good community development process.

At Decision Lab, we play the dual role of both the user and the manager of our online community. On the one hand, we want to extract as much information for our clients as possible; on the other, we take the responsibility of caring for our rapidly growing online community very seriously. That’s why we not only make every effort to keep our surveys short, relevant and engaging, but have also come up with a model to predict how well our surveys will perform.

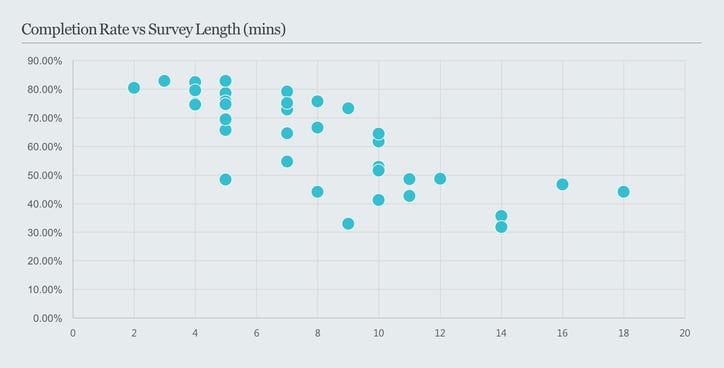

R² = 0.58237

The Method

In order to understand what happens to survey response rates as survey length increases, we analyzed responses and drop-offs in aggregate across all of the recent surveys we scripted and hosted on our survey platform. We defined response rate as the likelihood that a survey starter completes the survey, that is, the number of respondents who completed a survey divided by the number who started it, and calculated it from the respondent statuses that our system captures.
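
Read literally from this definition, the calculation can be sketched as below. This is only an illustration: the status labels (`completed`, `dropped_out`) and the function name are hypothetical, since the post does not list the statuses the platform actually records, and the sketch assumes every recorded status belongs to a respondent who started the survey.

```python
from collections import Counter

# Hypothetical status label; the platform's real status names are not given here.
COMPLETED = "completed"


def response_rate(statuses):
    """Share of survey starters who went on to complete the survey.

    `statuses` holds one status string per respondent who started the survey.
    """
    counts = Counter(statuses)
    starters = sum(counts.values())
    if starters == 0:
        raise ValueError("no survey starters recorded")
    # Counter returns 0 for a missing key, so a survey with no completes gives 0.0.
    return counts[COMPLETED] / starters


# Four starters, three of whom finished.
example_rate = response_rate(["completed", "dropped_out", "completed", "completed"])
```

With three completes out of four starters, `example_rate` is 0.75.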

Because we believe that when it comes to our respondents’ efforts, not all question types are created equal, we decided not to use number of questions as a measure of survey length. Instead, we used the median response time that our platform collects for each survey.
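
As a rough sketch of that measure, the snippet below turns a list of per-respondent completion times into a survey length in minutes. The function name, the choice of seconds as the input unit and the sample timings are assumptions made for illustration, not details of our platform.

```python
from statistics import mean, median


def survey_length_minutes(response_times_sec):
    """Median completion time of a survey, converted from seconds to minutes."""
    if not response_times_sec:
        raise ValueError("no response times recorded")
    return median(response_times_sec) / 60


# Made-up timings in seconds; the last respondent left the survey open for two hours.
example_times = [240, 300, 310, 330, 7200]
length_by_median = survey_length_minutes(example_times)
length_by_mean = mean(example_times) / 60
```

In this made-up example the median is 310 seconds, or about 5.2 minutes, while the mean is pulled up to roughly 28 minutes by a single idle respondent. That robustness to outliers is one practical reason to prefer the median as a length measure.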

In addition, we also looked into other survey attributes such as sampling patterns, intro page configurations, different question types compositions, skip logic complexity, the use of media (images and videos), and the survey topics.

Every minute counts

The first important finding was that survey length has a major impact on response rate. As expected, the longer the survey, the less likely respondents are to finish it. Even a simple bivariate analysis of survey length in minutes against completion rate reveals a lot.
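
For readers who want to try this kind of bivariate analysis on their own surveys, here is a minimal sketch of an ordinary least-squares line fit with its R². It assumes a straight-line relationship and one (length, completion rate) pair per survey. The function name and the sample values are made up for illustration and are not Decision Lab data.

```python
from statistics import mean


def fit_line(lengths_min, completion_rates):
    """Least-squares fit of rate = intercept + slope * length; returns (slope, intercept, R^2)."""
    if len(lengths_min) != len(completion_rates) or len(lengths_min) < 2:
        raise ValueError("need at least two paired observations")
    x_bar = mean(lengths_min)
    y_bar = mean(completion_rates)
    pairs = list(zip(lengths_min, completion_rates))
    sxx = sum((x - x_bar) ** 2 for x, _ in pairs)
    if sxx == 0:
        raise ValueError("survey lengths must not all be identical")
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in pairs)
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    # R^2 is the share of variance in completion rate explained by the fitted line.
    ss_tot = sum((y - y_bar) ** 2 for _, y in pairs)
    ss_res = sum((y - (intercept + slope * x)) ** 2 for x, y in pairs)
    r_squared = 1.0 if ss_tot == 0 else 1 - ss_res / ss_tot
    return slope, intercept, r_squared


# Made-up illustrative values, NOT Decision Lab data.
example_lengths = [2, 5, 8, 12, 15, 20]
example_rates = [0.84, 0.70, 0.66, 0.50, 0.47, 0.30]
slope, intercept, r_squared = fit_line(example_lengths, example_rates)
print(f"slope={slope:.4f} per minute, intercept={intercept:.4f}, R^2={r_squared:.3f}")
```

A negative slope means completion falls as surveys get longer, and R² indicates how much of the variation between surveys the length alone accounts for.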

Survey length accounts for more than 54% of the variability in the likelihood of a respondent completing our surveys. Even with a 1-minute survey, only about 85 out of 100 respondents will complete it. And for every extra minute added to the survey length, we lose roughly 3 more respondents. A 20-minute survey will likely see only 30-40 people finishing it. It’s a cautionary tale that every Decision Lab consultant is familiar with.
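
The rule of thumb above can be written as a small calculation. This is a sketch under stated assumptions: it reads the figures literally as a straight line (85 completes per 100 starters at 1 minute, 3 fewer for each extra minute), and the function and parameter names are hypothetical. Because the quoted figures are rounded, the result for long surveys is approximate and lands a little below the 30-40 range at 20 minutes, so treat it as a rough guide rather than an exact model.

```python
def expected_completes(length_min, starters=100, base_rate=0.85, loss_per_extra_min=0.03):
    """Rough number of completes for a survey of `length_min` minutes.

    Literal linear reading of the rule of thumb: `base_rate` applies to a
    1-minute survey and each extra minute costs `loss_per_extra_min`.
    """
    if length_min < 1:
        raise ValueError("length_min must be at least 1 minute")
    rate = base_rate - loss_per_extra_min * (length_min - 1)
    # Keep the rate within 0..1 so very long surveys cannot go negative.
    rate = max(0.0, min(1.0, rate))
    return starters * rate


completes_1_min = expected_completes(1)
completes_10_min = expected_completes(10)
completes_20_min = expected_completes(20)
```

Under these assumptions a 10-minute survey keeps about 58 of 100 starters, and a 20-minute survey about 28.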

At Decision Lab, we use various survey design best practices to keep our surveys short, meaningful and engaging for our respondents. Contact us to learn more about how we can design high-quality surveys for your brand.